100 Deep Q&A on AI, Paywalls, and Content Monetization

Content is created with a help of Gemini. 🙂

In summary, the blog post serves as an excellent strategic playbook for creators and publishers, arguing convincingly that the future of content monetization lies not in keeping AI out, but in finding ways to make AI pay for content access and training.

The future of content isn’t keeping AI out—it’s making AI pay to use it.

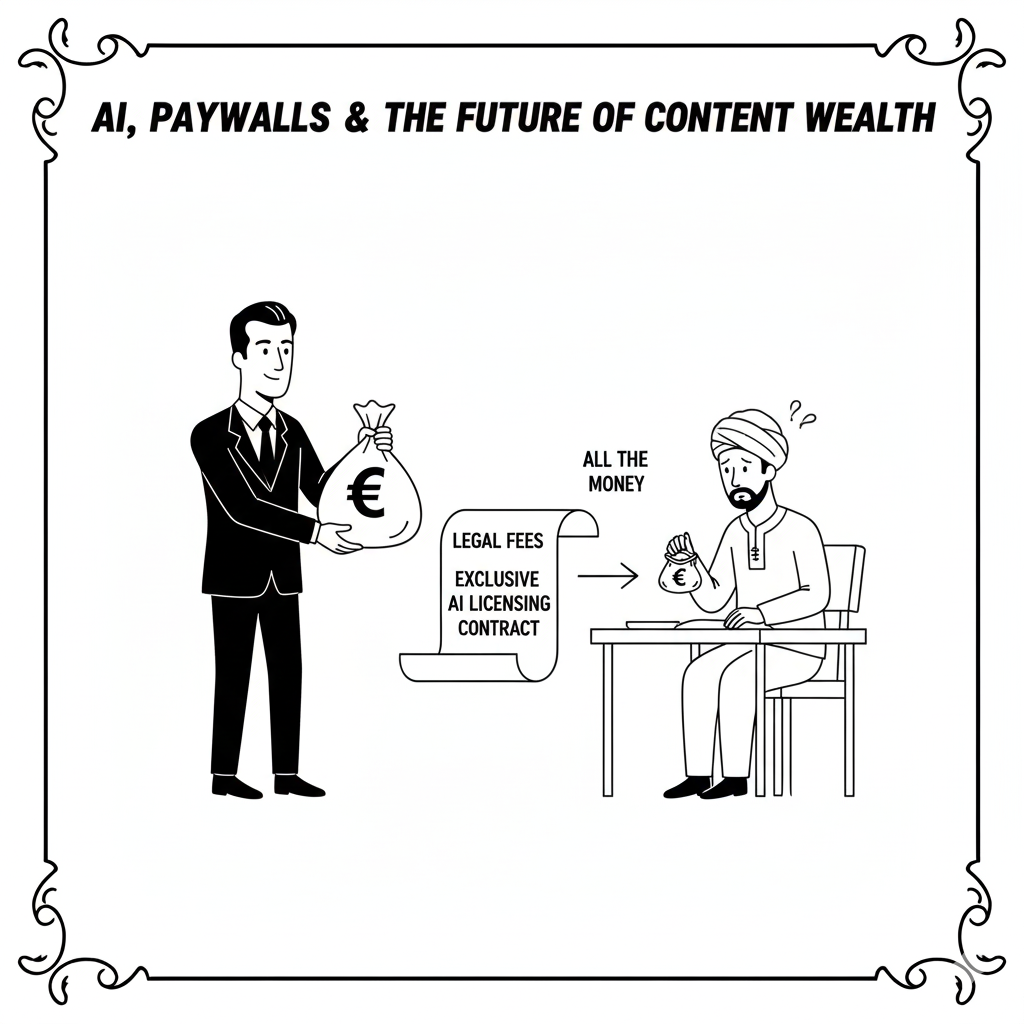

The “AI Pays, Users Read for Free” Model (The Reverse Paywall)

The Reverse Paywall is hypothetical model and provocative idea used in this text.

1. What is the core concept of the “Reverse Paywall” ?

It’s a hypothetical model in which an AI company pays content publishers for access to their material, allowing human users to access the content—or AI-generated derivatives like summaries—for free, subsidized by the AI’s payments.

2. What is the main benefit for the publisher in a Reverse Paywall model?

Publishers receive guaranteed, scalable revenue from a well-funded AI company, turning AI access into a predictable income stream rather than losing value to scraping or unlicensed use.

3. What is the main benefit for the AI company?

The AI gains a competitive advantage by legally offering users high-quality, premium, or up-to-date content that competitors without licensing deals cannot access or summarize.

4. What existing business practice is closest to the Reverse Paywall?

Large-scale licensing deals between AI companies (e.g., OpenAI, Google) and major news organizations (e.g., Associated Press) for bulk access to archives and real-time content.

5. What potential revenue source could an AI company use to fund the Reverse Paywall?

Revenue from profitable enterprise AI services or limited, unobtrusive advertising in the free user tier could subsidize the cost of paying publishers.

6. Why is this model difficult to implement widely?

It requires broad industry participation, standardized pricing across thousands of publishers, and proof that the financial upside is better than relying on public or open data.

7. How would the AI company verify that users are reading content it paid for?

It may not track individual user behavior; instead, it could pay bulk fees based on user base size or on the estimated contribution of the publisher’s content to training and outputs.

8. What is a key ethical concern for the content creator under this model?

Creators may lose their direct relationship with readers, and the AI might deliver only a summary instead of presenting the full article in the publisher’s intended format.

9. How does this model change the definition of a “subscriber”?

The AI company effectively becomes the main institutional subscriber, purchasing bulk access, while human users become end beneficiaries of the AI’s paid access.

10. What is a risk to the publisher if the AI summarizes the content too effectively?

High-quality AI summaries may reduce direct traffic to the publisher’s website and decrease traditional subscriptions, potentially creating long-term dependence on AI-generated revenue.

Pay-per-Crawl (PPC) and AI Training Data

11. “Pay-per-Crawl” (PPC) mechanism.

A technical protocol and pricing model that allows a content owner to charge an authenticated AI crawler a fee for each page request, turning access into a transactional, per-use revenue stream.

12. What company is associated with introducing a formal PPC protocol?

Cloudflare, which provides the infrastructure for websites to manage, authenticate, and charge AI crawlers for access. And what if CLoudFlare goes down?

13. Is PPC a one-time, unlimited right or a usage-based fee?

It is a usage-based micropayment applied to each crawl or page request.

14. What standard HTTP code is used by the PPC protocol to signal a price?

HTTP 402 “Payment Required,” a reserved status code repurposed to initiate payment between the site and the authenticated AI bot.

15. Why is PPC a form of access control?

It requires AI companies to identify their bots, authenticate themselves, and agree to payment terms before content is delivered—making access transparent and compensated.

16. What is the publisher’s primary goal in using PPC?

To receive compensation for content used in AI training, addressing concerns that such use often occurs without payment under “Fair Use” arguments.

17. Does paying a PPC fee mean the AI has the right to use the content permanently?

No. The fee covers only the data acquisition event; subsequent use is still governed by copyright and the licensing terms.

18. What is the alternative to PPC for publishers who don’t want AI access?

They can block AI crawlers via robots.txt (e.g., disallowing GPTBot or Google-Extended).

User-agent: GPTBot Disallow: /

Blocks GPTBot (OpenAI's crawler) from accessing any part of your site.

User-agent: Google-Extended Disallow: /

Blocks Google’s extended crawler (used for features like AI training or Knowledge Graph) from your entire site.

User-agent: Googlebot Allow: /

Allows the standard Google search crawler full access, so your pages can still appear in Google search results.19. Why do many AI companies prefer paying a bulk license over PPC?

PPC introduces high per-transaction complexity and overhead, whereas a bulk license offers a single predictable cost and often grants access to an entire archive.

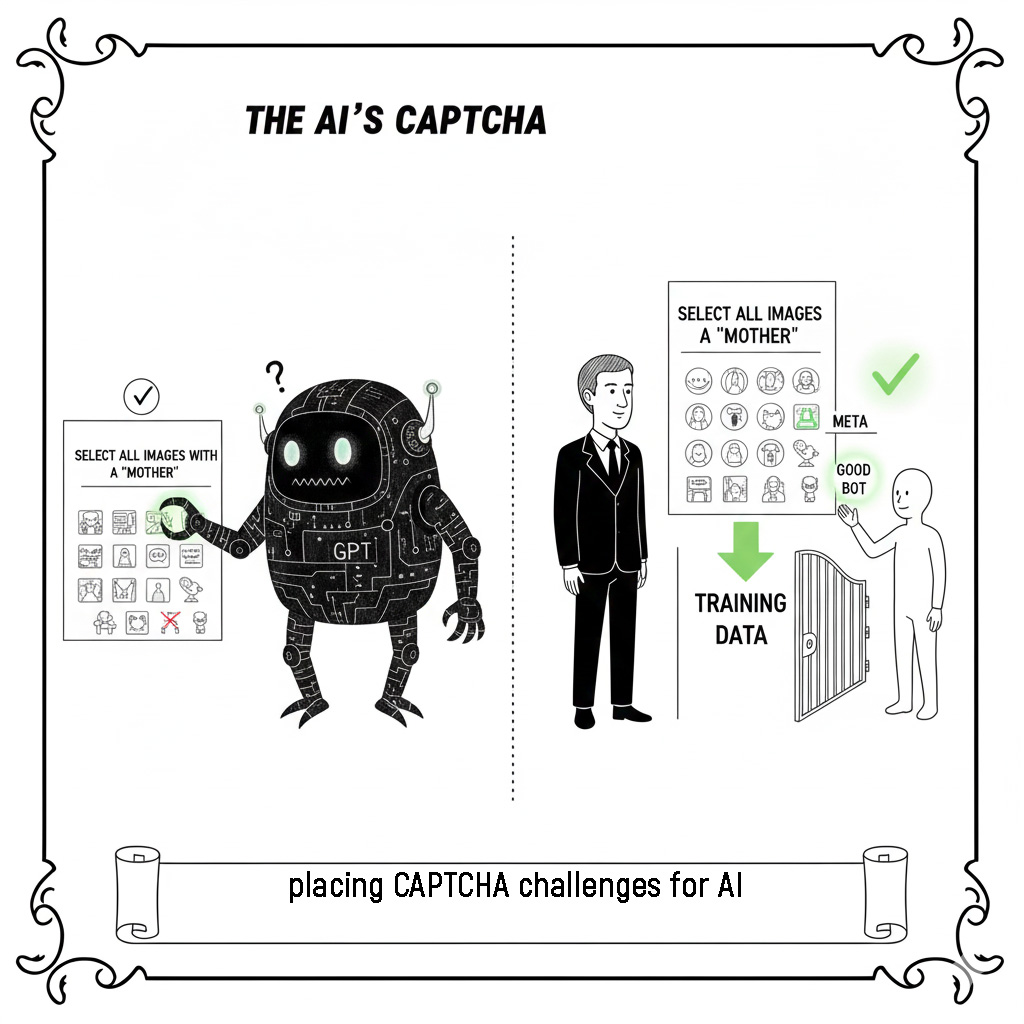

20. How does PPC help publishers identify “good” bots from “bad” bots?

Authenticated AI companies that follow the PPC protocol are recognized as legitimate, while bots ignoring the protocol are easily flagged as unauthorized scrapers and can be blocked.

The Problem of Protection and Post-Crawl Use

21. What is the fundamental problem with protecting content after it’s used for AI training?

Once data is integrated into a model’s parameters—its “knowledge”—it cannot be fully removed without retraining the entire model.

22. What is the only true enforcement mechanism against an AI model utilizing scraped content?

Copyright law and litigation. A court order is the only mechanism that can compel an AI company to alter a model, pay damages, or restrict usage.

23. What is the primary legal defense used by AI companies for scraping content?

Fair Use (in the U.S.) or Fair Dealing (in other regions), arguing that model training is a transformative act because the output is not a direct copy but a new creation.

24. Define “Transformative Use” in the context of AI training.

Using content not to reproduce it but to derive new patterns, relationships, and statistical structures that form a new tool—the trained model.

25. What constitutes a “non-transformative” or infringing AI output?

Any output that is verbatim or nearly verbatim to the original copyrighted material, effectively functioning as an unauthorized copy.

26. What is the technical mechanism content owners can use to prevent the initial scrape (besides PPC)?

A robots.txt file, which instructs compliant crawlers not to access certain directories or content.

27. What is the limitation of the robots.txt file?

It is honor-based. Malicious or non-compliant scrapers can simply ignore it and crawl the site anyway.

28. How does data obfuscation attempt to protect content?

By complicating how text is displayed—using heavy JavaScript, turning text into images, or fragmenting HTML—to make basic scraping tools fail.

29. Why is data obfuscation often ineffective against modern AI?

Modern AI scrapers use headless browsers capable of executing full JavaScript and rendering pages like a human user, defeating basic obfuscation techniques.

30. What is the role of the EU AI Act regarding training data protection?

It introduces transparency requirements that force AI companies to publish detailed summaries of copyrighted sources used during training, helping rightsholders identify misuse and enforce their rights.

Legal and Ethical Gray Areas

31. Why are so many legal precedents being set right now regarding AI training?

Because copyright laws were created long before generative AI existed, courts are now forced to interpret outdated concepts—such as “copy” and “derivative work”—in the context of entirely new AI processes.

32. If an AI pays for content but then violates the Terms of Service (ToS), what legal claim results?

A Breach of Contract claim, which is separate from (and can exist alongside) a claim of Copyright Infringement.

33. What is the concept of “Efficient Infringement” in this context?

It’s the idea that scraping content first and paying penalties later can be more profitable for AI companies than obtaining permission upfront, because the value of the data often exceeds the cost of potential fines.

34. What is a key ethical argument against allowing free scraping for training?

It weakens the economic incentives for humans to create high-quality, verified content—the very material AI systems rely on to produce accurate and valuable outputs.

35. Why is identifying the source of infringing AI output so difficult?

AI models blend billions of data points into statistical representations, making it extremely difficult to trace any specific output back to an individual source—this is often called the “black box” problem.

36. What is “Model Extraction” and why is it a threat to AI companies?

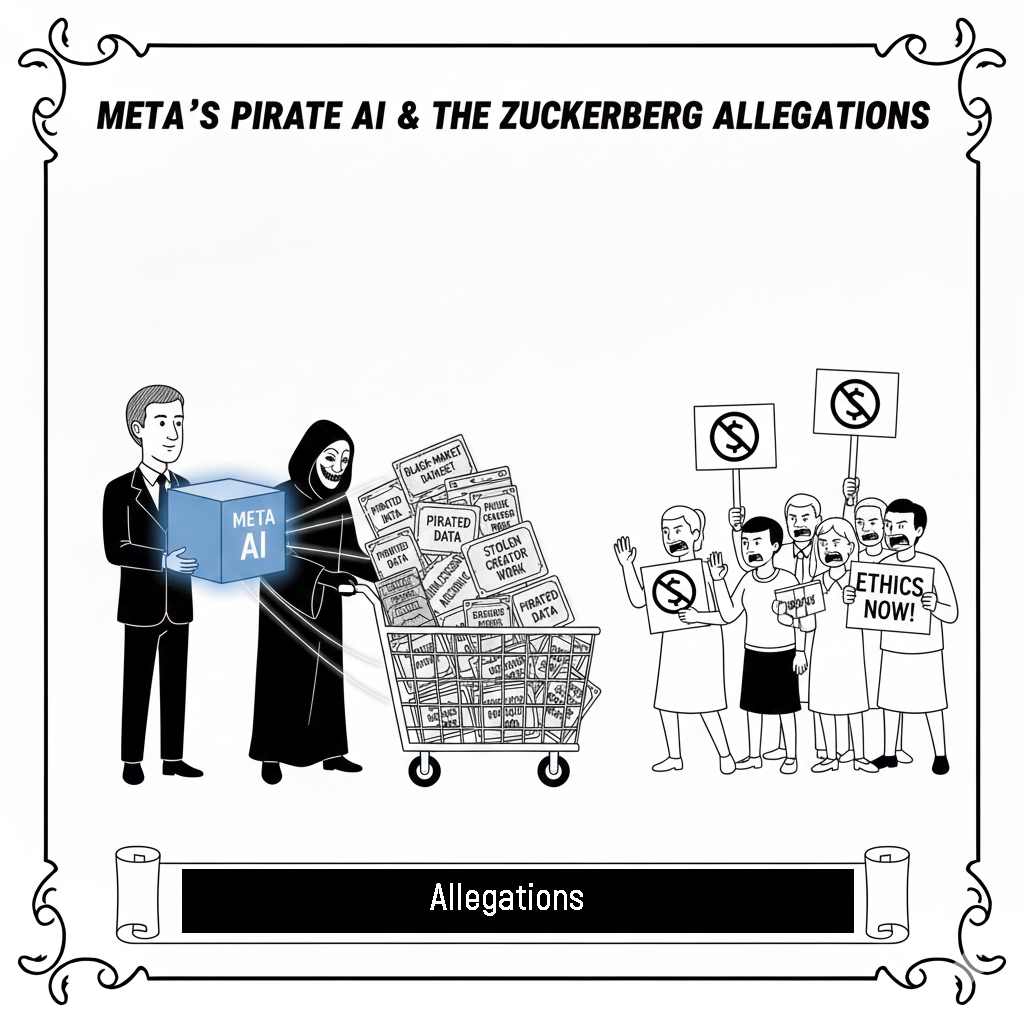

Model extraction uses strategic queries to make a proprietary model reveal its training data or replicate its internal structure, effectively stealing the AI company’s intellectual property.

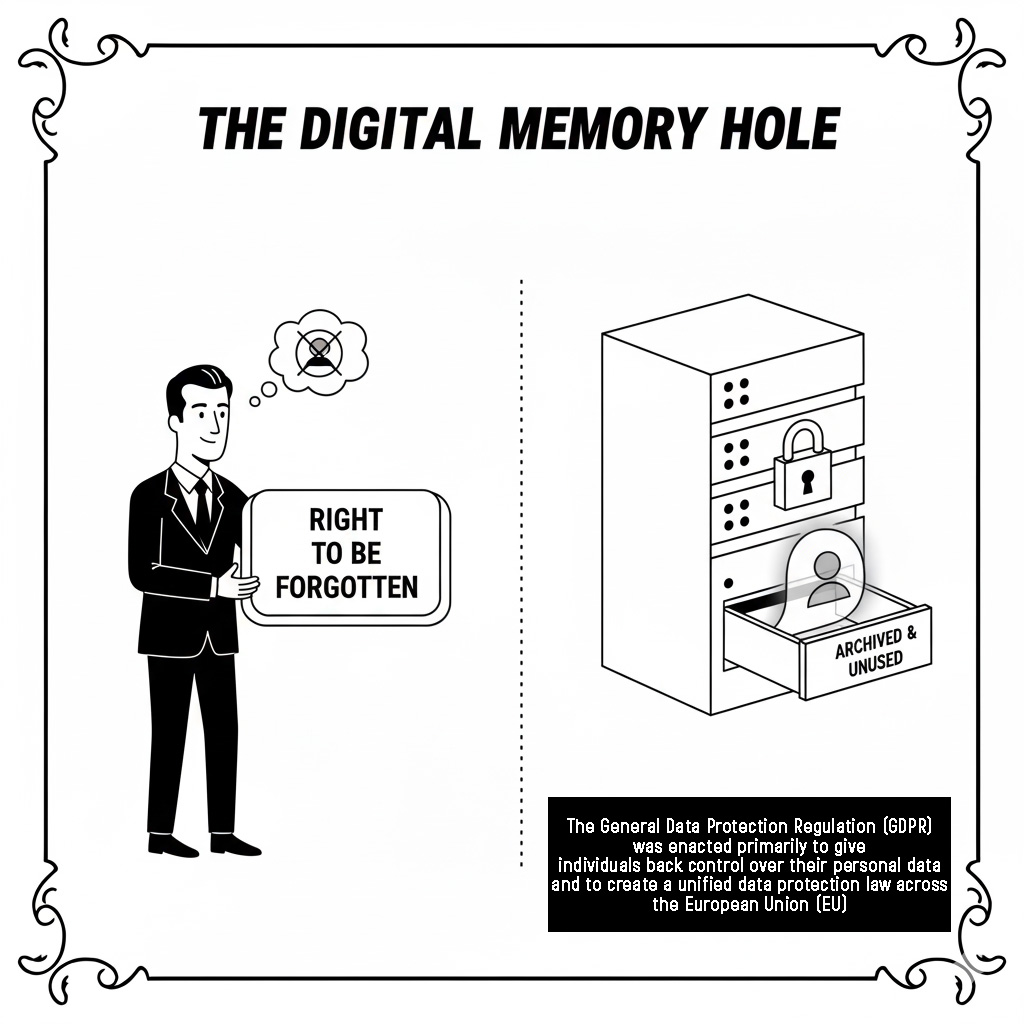

37. What is the “right to be forgotten” and how does it conflict with AI training?

It’s the right for individuals to have personal data removed. This conflicts with AI training because it is nearly impossible to delete specific data from a trained model without retraining it entirely.

Stop Meta using your data to train AI

38. What is the role of Synthetic Media in content protection?

AI-generated synthetic text, audio, or video can obscure the origin of content, making it harder for publishers to verify ownership or detect unauthorized reuse.

39. What is the ethical dilemma of using publicly available, non-paywalled content for free training?

Even though it may be legal, it raises the question of whether “publicly available” should equate to “free for commercial exploitation,” especially when AI systems may compete with the original creators.

40. What is Watermarking in the context of AI-generated content?

Watermarking means embedding hidden signals or metadata into AI outputs to prove they were generated by a specific model, aiding in authenticity verification and misuse detection.

Business Models and Economic Impact

41. How does the existence of AI change the publisher’s monetization strategy?

Publishers must shift from monetizing user access and clicks—both of which AI can bypass—to monetizing licensing and data rights, which AI systems require.

42. What is the economic term for AI training data being an “input” resource?

A Factor of Production. Content functions as a crucial input for AI models, making its cost and availability strategically significant for the tech industry.

43. How can content creators leverage the API economy to control AI access?

By restricting crawling and instead offering structured, controlled data through paid APIs, allowing them to monetize both the content and the delivery method.

44. What is the risk of a “Data Black Market” emerging?

Illegally scraped, high-quality datasets from premium publishers could be compiled and sold to smaller or unethical AI developers who want training data without paying for licenses.

45. How can publishers use premium human-in-the-loop services to compete with AI?

By offering high-value content requiring human expertise, deep analysis, and synthesis—areas where AI still generally underperforms compared to top specialists.

46. What does it mean for a publisher to have a “Data Moat”?

It means owning unique, valuable, hard-to-replicate datasets—such as historical archives or specialized scientific data—that AI companies must license to stay competitive.

47. How can AI support the traditional paywall model?

AI can help publishers better categorize, personalize, and recommend content, increasing the value of subscriptions for human readers.

48. What is the economic concept of “Diminishing Returns” for AI training data?

After a certain point, adding more general data yields minimal improvements to model performance, making only rare, high-quality, or specialized data worth paying for.

49. Why is “Trust” becoming a more valuable commodity in the age of AI?

With AI generating vast amounts of information—including errors—readers are increasingly willing to pay for verified, authoritative, fact-checked content from reputable publishers.

50. What is the potential benefit of the Reverse Paywall to small publishers?

It provides a predictable and steady stream of licensing revenue, helping small publishers compete with larger ones that can negotiate bigger, more favorable deals.

Technical and Protocol Details

51. How do major AI companies typically identify their official crawlers?

They use distinct User-Agent strings (e.g., GPTBot, CCBot) in their HTTP requests, allowing publishers to recognize and filter them.

52. What is the technical function of a “Sitemap” file for AI training?

A sitemap lists every page on a site, enabling AI crawlers to efficiently discover, index, and reach content that might otherwise be hidden behind dynamic navigation.

53. What is a “Headless Browser,” and why is it problematic for publishers?

A headless browser is a browser without a graphical interface. It can run JavaScript and simulate real user behavior, making it capable of bypassing basic bot detection and scraping obfuscated content.

54. What is Rate Limiting, and how can a publisher use it against AI?

Rate limiting restricts how many requests an IP can make in a set timeframe. This slows large-scale scraping operations and can make high-volume crawling economically impractical.

55. How can a publisher use CAPTCHA to block AI training?

By placing CAPTCHA challenges before serving content, publishers create a barrier that automated AI bots generally cannot solve reliably without human intervention.

56. What is the purpose of the proposed robots-meta tag (noai)?

It is a voluntary HTML metadata directive that signals the publisher’s preference that their content not be used for AI training, even though it has no binding enforcement mechanism.

57. What is the technical mechanism by which AI “steals” content from a paywall?

By using a paid, authenticated account to access the content, or by exploiting moments where the full article briefly loads in the browser before the paywall overlay appears.

58. How does Differential Privacy apply to AI training?

It is a method that allows models to learn from data while mathematically ensuring that individual data points—especially sensitive personal information—cannot be reconstructed from the model’s outputs.

59. Why is the cost of retraining a large language model a form of protection?

Retraining a major model can cost millions of dollars and take weeks or months, so the threat of being ordered to retrain acts as a strong deterrent against unauthorized data use.

60. What is the main difference between scraping and using a licensed API for AI training?

Scraping is uncontrolled, brittle, and legally risky, while licensed API access is structured, reliable, and governed by explicit contractual terms and pricing.

Deeper Dive into Copyright and Litigation

61. Define “Derivative Work” in the context of AI.

A new work based on one or more preexisting works. Publishers argue an AI model trained on their content is a derivative work that requires a license.

62. Why do courts often rely on the “Market Harm” factor in Fair Use analysis?

Because it examines whether the new use (AI training or output) harms the market for the original work. If the AI competes directly, it weighs against Fair Use.

63. What is the legal status of an AI-generated work in terms of copyright?

The U.S. Copyright Office currently requires a human author. Purely AI-generated works generally cannot be copyrighted.

64. What is the core argument of the New York Times lawsuit against OpenAI?

That OpenAI used millions of copyrighted articles for training and now competes with the Times by producing outputs that substitute for their content.

65. Why is the concept of “De Minimis Copying” relevant to AI?

Because AI often copies extremely small fragments from massive datasets, and some argue this copying is too minor to constitute infringement.

66. What specific form of output is most likely to be ruled as copyright infringement?

Long-form summaries or verbatim reproduction of premium, paywalled content that directly substitutes for the original work.

67. What is a “Remedy” a court might order against an infringing AI model?

Monetary damages, an injunction stopping the use, or an order requiring the purging of infringing data from the model.

68. How do Class Action lawsuits impact the AI-content landscape?

They let large numbers of creators sue collectively, increasing potential penalties and giving creators more leverage.

69. What is the difference between statutory damages and actual damages in copyright?

Actual damages measure the creator’s real financial loss. Statutory damages are fixed amounts per infringement defined by law.

70. What is the primary function of a Collective Licensing Body in this context?

To act as a central intermediary that collects licensing fees from AI companies and distributes royalties to creators.

Implications for Content Quality and Creation

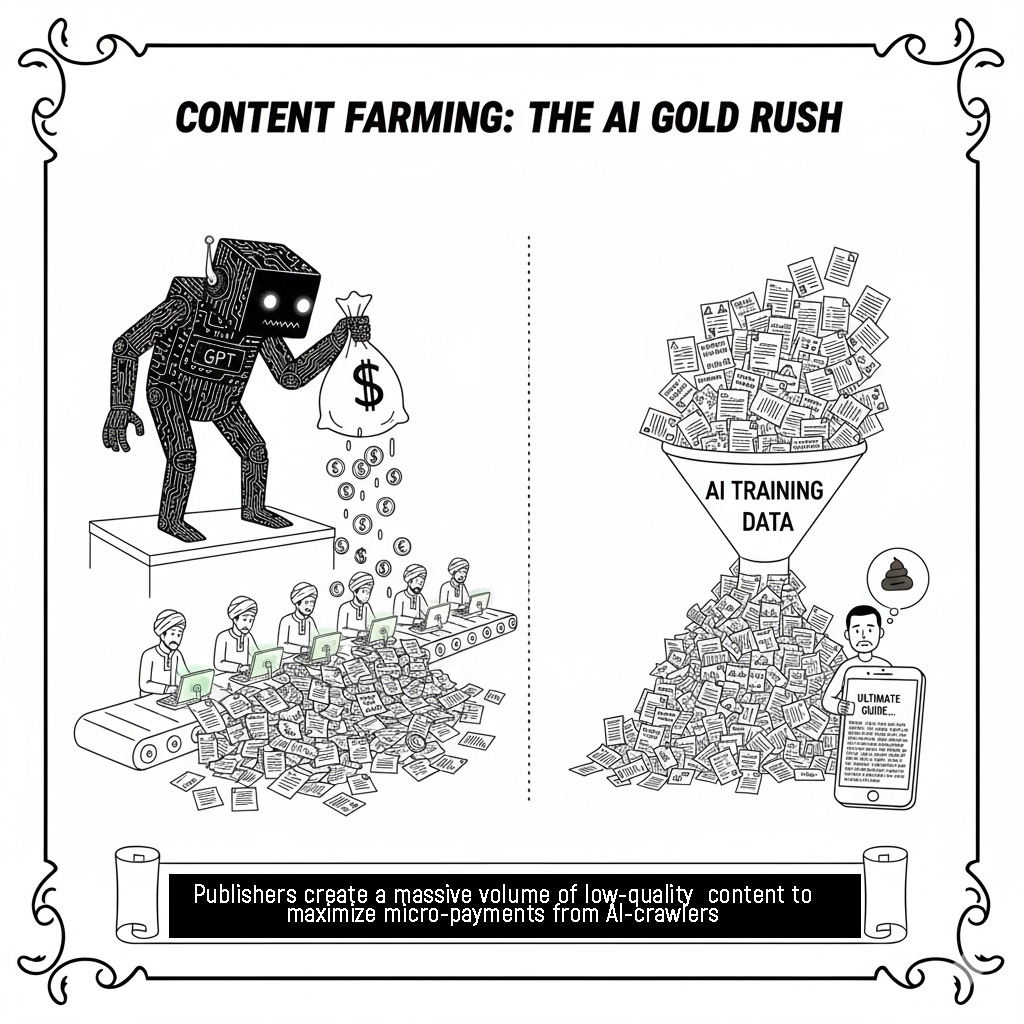

71. If AI pays for everything, what is the risk of “Content Farming”?

It encourages the creation of massive amounts of low-quality, AI-optimized content produced solely to maximize crawl revenue, with little value to human readers.

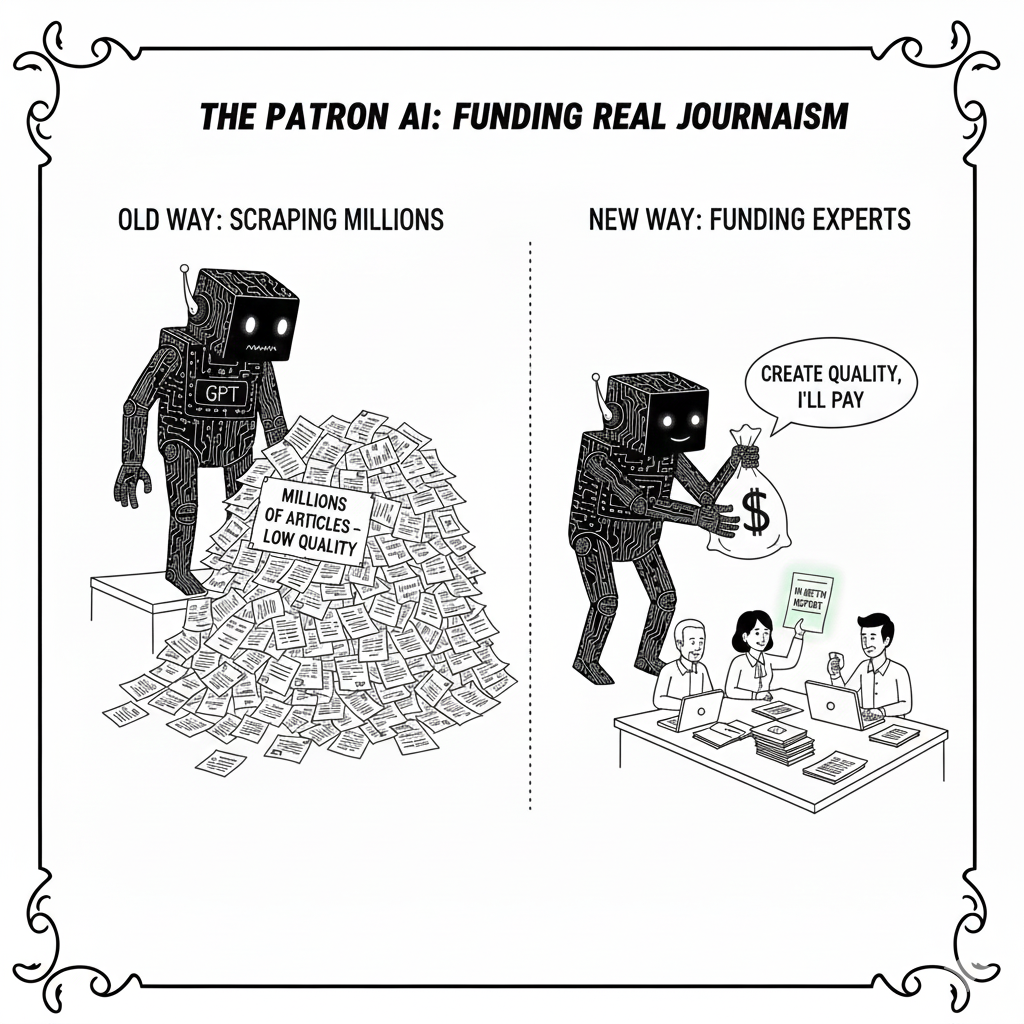

72. How can the Reverse Paywall encourage higher-quality content creation?

If AI companies pay more for unique, authoritative data, creators gain an incentive to produce depth and quality rather than volume.

73. What is the importance of Provenance in AI training data?

It ensures the source, authorship, and trustworthiness of content. AI companies pay more for data with clear provenance because it reduces hallucination risk.

74. Why is “Niche Content” becoming increasingly valuable to AI?

General web data is saturated; specialized or hard-to-access information (industry reports, expert analyses, academic material) provides greater training value and commands premium pricing.

75. How does the AI economy complicate the traditional writer’s contract?

Contracts now must specify who holds the rights to license content for AI training—the writer, the publisher, or the platform.

76. What is the role of Metadata in ensuring content protection and compensation?

Standardized, rich metadata helps AI systems identify ownership, respect licensing terms, and route compensation accurately.

77. What are “Dark Data Sets”?

High-value, often proprietary data collections (internal documents, subscriber-only communities, private databases) intentionally kept off the public web and prized by AI developers.

78. What is the risk of “Filter Bubble” generation in AI training?

If AI trains mostly on data from entities willing or able to license content, the resulting model may become biased, excluding diverse or underrepresented voices.

79. How can an author use blockchain/NFTs to track and monetize AI usage?

By registering content on a blockchain, authors can maintain a permanent record of ownership and automatically charge for every training use via smart contracts.

80. What is the challenge of “Version Control” for AI training?

Content may be updated or corrected, but AI models may have already trained on outdated versions, leading to persistent factual inconsistencies in outputs.

Hypothetical and Future Scenarios

81. Could a user pay to have an AI forget their data?

Hypothetically, yes—through a specialized, paid “un-training” or data-purging service—but the technical feasibility and cost would be extremely high.

82. How could a government enforce a national “AI Tax” on training data?

By levying charges on large-scale AI operations, such as data center usage or GPU consumption, and redistributing the revenue to content creators.

83. What is the scenario of “Ad-Supported AI”?

AI companies could allow users free access to content summaries while embedding personalized advertisements, monetizing users’ attention.

cost of doing business

- The New York Times sues OpenAI and Microsoft for using its stories to train chatbots

- Inside the News Industry’s Uneasy Negotiations With OpenAI

- OpenAI loses fight to keep ChatGPT logs secret in copyright case

84. Could an AI become a “Content Patron”?

Yes. An AI entity could directly fund new works from human creators, ensuring a stream of high-quality, compensated training data.

85. What is the implication of AI being trained on pirated content?

The AI model could be legally “tainted,” exposing the developer to significant liability from copyright holders.

86. How could AI make open-source data less common?

If AI companies pay for content, creators may remove their works from public domains, effectively “enclosing” the internet.

87. What is the concept of a “Personal AI Filter”?

A user-side AI tool that reads incoming content and automatically filters out low-quality or AI-generated material, enforcing quality control from the user perspective.

88. How can a Decentralized AI (trained by a community) manage content licensing?

Through a decentralized autonomous organization (DAO) that collects licensing fees into a shared wallet and pays publishers via smart contracts.

89. What is the “Last Mile Problem” for AI monetization?

The final, most expensive step of improving an AI model often requires access to small amounts of highly proprietary data that are difficult to license.

90. What is the simplest, non-technical solution for a publisher to prevent scraping?

Stop publishing digitally and rely on print-only or highly controlled physical formats, such as specialized newsletters or magazines.

Synthesis and Conclusion

91. Summarize the relationship between PPC and Copyright Law.

PPC (Pay-per-Crawl) provides a way to compensate publishers at the point of access, while Copyright Law serves as the enforcement mechanism if content is used beyond the agreed terms or without authorization.

92. Why is the debate often framed as a “Zero-Sum Game”?

Publishers worry that each AI-generated summary or derivative work represents a lost paid click or subscription from a human reader.

93. What is the most promising path toward a sustainable content ecosystem for both AI and humans?

Establishing mandatory, standardized licensing ensures AI pays for content while providing clear legal boundaries to protect creators’ rights.

94. What is the definition of AI Hallucination in this context?

AI hallucination is the generation of factually incorrect or nonsensical content, often resulting from low-quality or conflicting training data, making verified sources more valuable.

95. How does the speed of AI development affect the legal process?

AI technology evolves faster than the law can adapt, leading to a perpetual lag where lawsuits address outdated models while newer systems are already in use.

96. What is the long-term risk of information poverty due to paywalls?

High-quality information may become accessible only to those who can afford it, deepening digital and economic divides.

97. How does the Reverse Paywall help solve the Information Poverty problem?

It democratizes access by making premium content available to all users, with costs subsidized by large AI companies rather than individual readers.

98. Why is the value of “Curated Data” exponentially higher than raw data?

Curated data is clean, fact-checked, and categorized, reducing training costs and improving AI performance, making it far more valuable and worth licensing.

99. What is the “Tragedy of the Commons” risk in free content scraping?

The incentive to exploit public web content without compensation leads to depletion of high-quality resources, discouraging new content creation.

100. What is the most brilliant takeaway from our discussion?

The future of content monetization hinges not on keeping AI out, but on finding ways to make AI pay for access, synthesis, and use of human creativity.

Industry Commentary on Pay-per-Crawl (PPC)

101. What is the primary concern for small or niche publishers regarding PPC pricing?

That flat, per-crawl fees (often fractions of a cent) undervalue specialized or authoritative content, yielding only “crumbs” instead of sustainable revenue.

102. How does the Crawl-to-Refer Ratio quantify the publisher’s problem with AI?

It measures the number of pages an AI crawls versus the clicks (referrals) it sends back. Ratios like 1,000:1 highlight a broken economic model where publishers gain little from extensive crawling.

103. What is a key non-monetary benefit of PPC for publishers?

Negotiating leverage. Publishers can block or charge AI crawlers by default, giving them a stronger position to secure direct licensing deals, attribution, or traffic from AI companies.

104. Why are SEO (Search Engine Optimization) experts sometimes concerned about the PPC model?

Default blocking of AI crawlers may make client content invisible or outdated in AI-powered search results and answer engines, negatively affecting visibility goals.

105. What is the argument that PPC could drive higher content quality?

If AI crawlers must pay, they will prioritize high-quality, authoritative, well-structured, and unique content, incentivizing publishers to compete on excellence rather than volume.

106. What technical limitation does PPC currently face regarding content value?

Flat pricing across an entire domain ignores content value differences—e.g., a simple FAQ may cost the same as a Pulitzer-winning investigation.

107. How does PPC put Cloudflare in the position of a Merchant of Record (MoR)?

Cloudflare manages the full payment process—collecting fees from AI companies and distributing revenue to publishers—acting as the key financial intermediary.

108. Why might an AI company be reluctant to use the PPC system?

They can often access large, pre-scraped datasets from sources like Common Crawl for free, making PPC microtransactions seem unnecessary unless the content is essential.

109. What is the publisher’s strategic alternative to PPC for monetization?

Focusing on email registrations and newsletters, trading limited free access for users’ contact information to maintain direct marketing and subscription conversion control.

110. What is the philosophical shift represented by Cloudflare’s new default setting for AI crawlers?

A shift from an “Opt-Out” internet (free by default, requiring manual blocking) to an “Opt-In” internet (blocked by default, requiring explicit permission or payment for access).

Screw AI. Win. Follow the Law:

1. IP FORTIFICATION: Register Now. → Mandatory Statutory Damages: Without Federal registration before infringement, you waive the right to mandatory statutory damages (up to $150k per work for willful infringement). We make the cost of their theft non-negotiable and astronomically high.

2. THE TRAP CLAUSE: → Breach of Contract (Willful): Affix an Explicit Opt-Out Notice and a Liquidated Damages Clause (e.g., “$50,000 per instance of unauthorized use”). This converts the infringement into a Willful Breach of Contract, making their Fair Use defense impossible and justifying the maximum financial penalty.

3. INJUNCTIVE STRIKE: → The Final Demand: File for an injunction demanding algorithmic disgorgement. The threat of forcing the AI company to destroy/retrain their multi-million dollar model will compel an immediate, lucrative settlement and a perpetual licensing deal in your favor. We make the cost of non-compliance exceed their entire market value.

DO NOT WAIT. REGISTER. AFFIX. DEMAND.

Collective legal action is becoming the modern equivalent of a “union” for creators.

What Collective Legal Action Is

- A group of creators (authors, artists, journalists, etc.) joins forces to sue or negotiate with large corporations, like AI companies.

- Legally, this is often done through a class-action lawsuit or a collective licensing body.

- It pools resources—money, legal expertise, and bargaining power—to achieve outcomes that small individual creators could never accomplish alone.

2. How It Mirrors a Union

| Traditional Union | Collective Legal Action for Creators |

|---|---|

| Workers band together to negotiate wages, benefits, and working conditions. | Creators band together to demand fair compensation and licensing for their content. |

| Collective bargaining leverages the power of numbers. | Collective lawsuits leverage the aggregated legal weight of many rights holders. |

| Protects individuals from being exploited by powerful employers. | Protects small creators from exploitation by AI companies that scrape content at scale. |

3. Benefits

- Shared Legal Costs: Expensive lawsuits become affordable.

- Bargaining Power: AI companies are more likely to settle or agree to licensing when facing hundreds or thousands of content owners.

- Standardization: Helps create uniform contracts, fees, and licensing practices across the industry.

- Visibility & Influence: A collective voice can push for legislation or policies that protect creators more effectively than individual efforts.

4. Limitations

- Less flexibility for individual negotiation—you follow the group’s strategy.

- Requires organization, trust, and coordination among members.

- Outcomes depend on the legal system and courts, which can be slow.

💡 Bottom line: Collective legal action is essentially a modern, digital-age union for creators, designed to level the playing field against giant AI and tech companies. It doesn’t replace traditional unions entirely, but it gives small creators the power of scale they need in the AI era.

Comparison of Web Monetization Models. Adsense vs Zero search clicks vs pay per crawls

| Feature | AdSense / Ad Revenue | Zero Search Clicks | Pay-Per-Crawl (PPC) |

|---|---|---|---|

| Monetization Source | User attention / clicks | Search engine knowledge | AI data acquisition / training |

| Revenue Model | Pay-Per-Click (PPC) or Cost-Per-Mille (CPM) from advertisers | Loss of revenue from user clicks and ad impressions | Direct micro-payments from AI companies per page accessed |

| Who Pays | The advertiser | The publisher (via lost traffic/revenue) | The AI company (e.g., Google, OpenAI) |

| Publisher’s Goal | Maximize page views and time on page to serve more ads | Mitigate traffic loss and find new revenue streams (e.g., subscriptions) | Monetize data rights directly, regardless of human readership |

| The Conflict | The engine of the original web economy | Search engines answering queries directly on the SERP | AI scraping content to answer queries directly |

The future of SEO and content creation is that search engines and chatbots still need to understand content—but Google and other AI systems also use that same content to generate answers, summaries, and products without compensating creators. The only reliable way to make AI companies pay for training and information is to put full content behind a paywall. Creators can still provide short descriptions and keyword-rich summaries for indexing, while keeping the complete text protected. In a sense, this approach brings keywords back as an important ranking factor.

Give them something to E-E-A-T and keep the best for yourself and visitors who are willing you to support.

again:

The future of content monetization hinges not on keeping AI out, but on finding ways to make AI pay for access, synthesis, and use of human creativity.

Disclaimer: This content was generated or assisted by an artificial intelligence Gemini model. While every effort has been made to ensure accuracy and relevance, please verify facts and interpretations independently. Pics by Gemini.